Achieving AI Governance at Scale: A Comprehensive Approach

AI governance is critical for organizations that want to integrate artificial intelligence into their operations. As the number of AI systems within an organization grows rapidly, a robust governance framework becomes necessary to manage the associated risks and keep pace with a changing regulatory landscape. Strong governance protects against regulatory risk and builds trust among customers and investors, making it both a compliance requirement and a competitive differentiator.

The Need for AI Governance

Organizations are increasingly adopting ambitious AI strategies, often championed at the highest levels, including board meetings and shareholder communications. However, integrating AI carries inherent risks, such as a potential loss of trust, and the governance teams responsible for managing these systems are often small. The rapid proliferation of AI models, particularly when foundation models are customized and reused across multiple use cases, further complicates governance. Insufficient oversight and unclear ownership can result in model drift or bias, undermining the effectiveness of AI-driven decisions.

Challenges in Scaling AI Governance

The primary challenge in scaling AI governance lies in managing the diverse and growing number of AI models and their use cases. Small teams are tasked with overseeing these models, which may be used in high-risk scenarios, such as resume filtering or customer interactions. Additionally, the regulatory environment is constantly evolving, requiring organizations to update their policies not only for AI but also for related areas like fraud and cybersecurity.

Building Institutional Knowledge

As organizations progress in their AI adoption journey, they accumulate valuable lessons that contribute to institutional knowledge. This knowledge must be harnessed to continuously improve AI processes and governance frameworks. The iterative process of developing, monitoring, and validating AI systems is crucial for enhancing the quality and safety of AI applications.

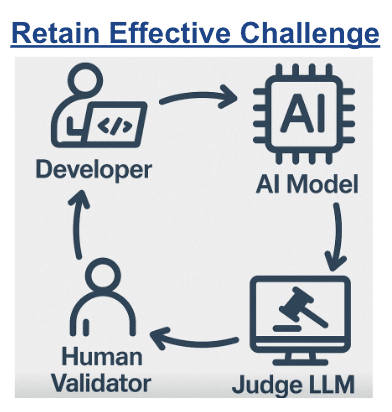

The Concept of AI Teaming

AI teaming, a collaboration between human judgment and AI automation, is essential for effective AI governance. This approach leverages the strengths of both, combining human creativity, ethics, and judgment with AI's scalability, speed, and pattern-recognition capabilities. The AI teaming concept is already prevalent in sectors such as defense, healthcare, and education, where it improves efficiency and quality.

Implementing AI Teaming in Governance

AI teaming involves a two-phase approach in which model developers and validation teams work side by side. The process begins with a template that outlines the model's objectives, risks, and suggested tests. Iterative testing and documentation, supported by AI, then produce comprehensive model documentation that is auditable and compliant with regulations.
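To make the template-driven workflow more concrete, here is a minimal Python sketch, assuming a simple in-memory record. The class names `ModelDocumentation` and `CheckResult`, the example test names, and the rule that a human validator may sign off only after every suggested test has passed are all hypothetical illustrations, not a description of any specific product or of the paper's method.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CheckResult:
    """Outcome of one suggested test (hypothetical structure)."""
    name: str
    passed: bool
    notes: str = ""


@dataclass
class ModelDocumentation:
    """Hypothetical template: the model's objectives, risks, and suggested tests."""
    model_name: str
    objectives: list[str]
    risks: list[str]
    suggested_tests: list[str]
    results: dict[str, CheckResult] = field(default_factory=dict)
    validator: Optional[str] = None  # human reviewer who signs off

    def record(self, result: CheckResult) -> None:
        # Developers (possibly assisted by AI tooling) log each test outcome.
        if result.name not in self.suggested_tests:
            raise ValueError(f"unknown test: {result.name}")
        self.results[result.name] = result

    def outstanding_tests(self) -> list[str]:
        # Suggested tests that have no recorded result yet.
        return [t for t in self.suggested_tests if t not in self.results]

    def approve(self, validator: str) -> None:
        # Human validation step (assumed rule): sign-off only when every
        # suggested test has a recorded, passing result.
        if self.outstanding_tests():
            raise RuntimeError("cannot approve: tests still outstanding")
        if not all(r.passed for r in self.results.values()):
            raise RuntimeError("cannot approve: failing tests")
        self.validator = validator

    def is_audit_ready(self) -> bool:
        # Auditable once a named human has approved the documentation.
        return self.validator is not None


if __name__ == "__main__":
    doc = ModelDocumentation(
        model_name="resume-screener",  # hypothetical model
        objectives=["Rank applicants for recruiter review"],
        risks=["Demographic bias", "Model drift"],
        suggested_tests=["bias_audit", "drift_check"],
    )
    doc.record(CheckResult("bias_audit", passed=True))
    print(doc.outstanding_tests())  # ['drift_check']
    doc.record(CheckResult("drift_check", passed=True))
    doc.approve("validation-team-lead")
    print(doc.is_audit_ready())  # True
```

The design choice here is that the AI-assisted side only records evidence, while the state change that makes the documentation audit-ready requires an explicit, named human approval.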

Continuous Improvement and Feedback

The feedback loop between human teams and AI systems is vital for refining AI governance processes. Human expertise is crucial for validating AI outputs and ensuring compliance with organizational and regulatory standards. This collaboration fosters a self-learning system that adapts to new insights and regulatory changes.

Looking Ahead

AI governance at scale requires a modernized approach that integrates AI teaming to manage the growing complexity of AI models. By automating routine tasks like testing and documentation while maintaining human oversight, organizations can achieve scalable compliance and proactive risk management. Governance frameworks will need to rely on adaptive learning systems and collaborative tools to bridge technical and business teams. The ultimate goal is to ensure that AI systems are not only effective but also aligned with organizational values and regulatory expectations.

This teaming approach empowers organizations to confidently leverage AI technologies while safeguarding against potential risks and ensuring compliance with evolving standards.

Get your copy of David’s paper: Financial institutions need new strategies to govern AI at scale.