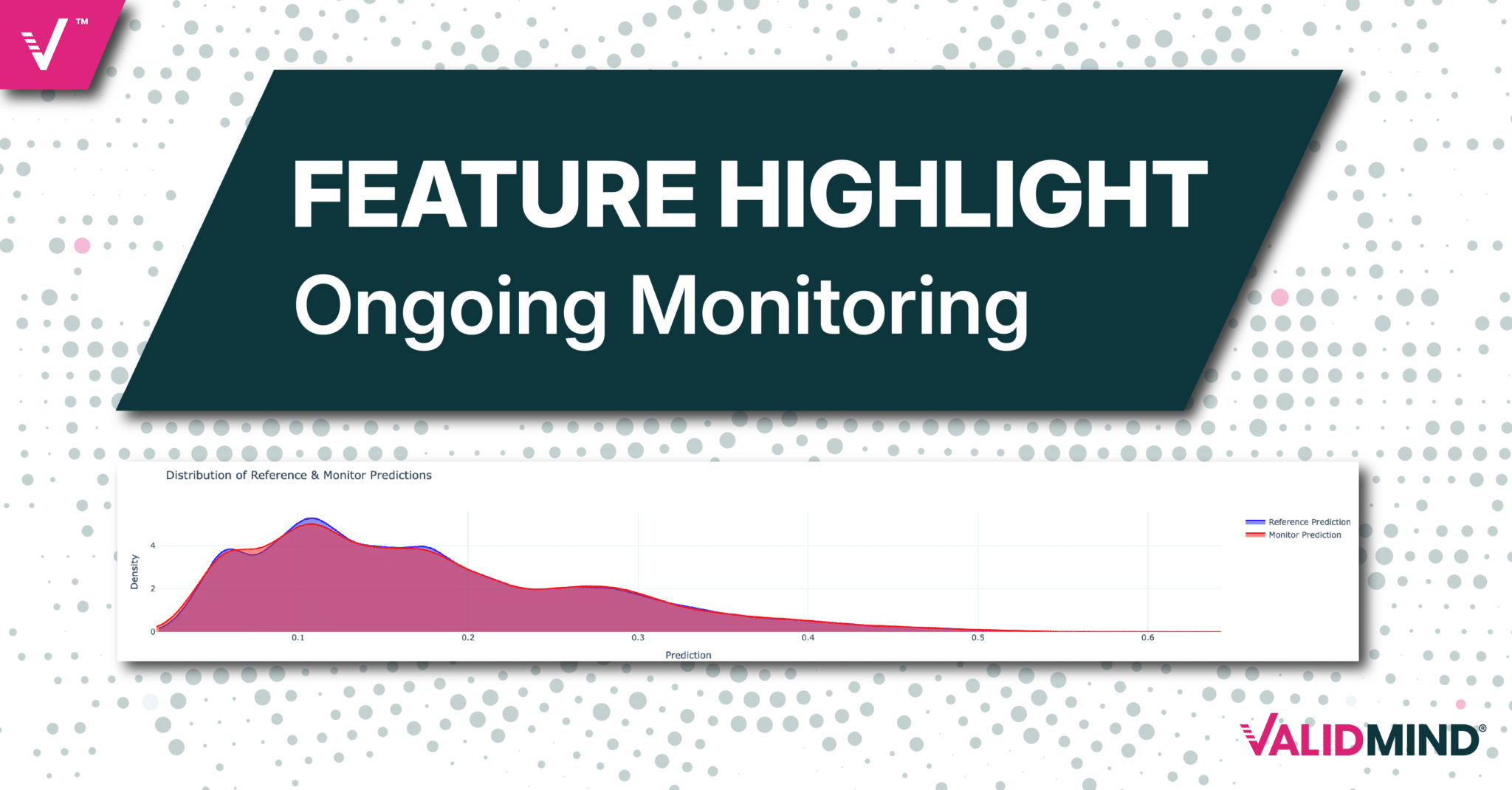

Feature Highlight: Ongoing Monitoring of Models for Regulatory Compliance and Robust Risk Management

In today’s evolving landscape of AI and advanced analytics, ensuring that your models perform as intended is critical for both regulatory compliance and operational integrity. Model risk management doesn’t end at approval and deployment — financial institutions governed by SR 11‑7 and SS1/23, along with those prioritizing strong risk controls, rely on continuous monitoring to uphold performance and stakeholder trust. This post examines how ValidMind’s ongoing monitoring capabilities — particularly our metrics over time feature — enable your risk management teams to identify performance trends and proactively address potential model degradation before it impacts business operations.

Regulatory imperatives

Regulatory frameworks, such as SR 11‑7 and SS1/23, establish a disciplined approach to model risk management, emphasizing ongoing evaluation rather than a “set‑and‑forget” process. Key principles include:

- Continuous performance evaluation — Models must be monitored against benchmarks to detect deviations from expected behavior.

- Independent validation and audits — Regular back-testing and independent reviews ensure model assumptions and outputs remain reliable.

- Comprehensive documentation and governance — Full lifecycle documentation supports transparency, accountability, and regulatory compliance.

- Routine monitoring and escalation — Effective tracking of model performance, with clear protocols to address drift or anomalies.

These requirements reinforce the need for vigilant model oversight. Models evolve with new data, shifting market conditions, and operational changes — making continuous monitoring essential.

Internal risk management

Beyond regulatory requirements, there is a strong internal business case for continuous model monitoring. Firms rely on models for critical decisions, and performance degradation can lead to financial and reputational harm. Examples of monitoring in business-critical model use cases include:

Credit models and default rates

Credit risk models determine lending practices and risk exposure, but economic shifts, changes in consumer behavior, or model drift can undermine their accuracy over time:

- Changing market conditions — A model that once performed well in stable conditions may underestimate risk in a downturn.

- Drift detection — Monitoring helps detect deviations in predicted default rates, prompting timely recalibration.
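One common way to quantify a shift in predicted default rates is the population stability index (PSI). The sketch below is a minimal, standard-library illustration and not ValidMind's implementation; the function name, the equal-width binning, and the sample scores are all assumptions made for the example. A widely quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the right cut-off depends on your portfolio and policy.

```python
import math

def population_stability_index(reference, monitoring, bins=10, eps=1e-6):
    """PSI between two samples of scores in [0, 1], using equal-width bins."""
    def bin_shares(scores):
        counts = [0] * bins
        for s in scores:
            idx = min(int(s * bins), bins - 1)  # clamp s == 1.0 into the last bin
            counts[idx] += 1
        total = len(scores)
        # eps avoids log(0) for empty bins; it inflates PSI on tiny samples
        return [max(c / total, eps) for c in counts]

    ref, mon = bin_shares(reference), bin_shares(monitoring)
    return sum((m - r) * math.log(m / r) for r, m in zip(ref, mon))

# Hypothetical predicted default probabilities (illustrative values only)
reference_scores = [0.02, 0.05, 0.08, 0.11, 0.15, 0.18, 0.22, 0.25, 0.31, 0.40]
downturn_scores = [0.12, 0.18, 0.25, 0.31, 0.38, 0.42, 0.47, 0.55, 0.61, 0.70]

psi_same = population_stability_index(reference_scores, reference_scores)
psi_shift = population_stability_index(reference_scores, downturn_scores)
print(f"PSI vs. itself: {psi_same:.3f}, PSI vs. downturn sample: {psi_shift:.3f}")
```

In practice you would compute this on thousands of scored accounts per period rather than ten, so that empty bins are rare and the `eps` floor has little effect.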

Fraud models and anomaly detection

Fraud detection models analyze transactions for suspicious patterns, but fraud tactics evolve. Without monitoring, models risk:

- False positives — Flagging legitimate transactions due to shifting customer behavior.

- False negatives — Missing new fraud patterns, creating risk gaps.
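Both failure modes can be tracked as rates per reporting period once confirmed outcomes are available. The following is a minimal sketch under that assumption; the `error_rates` helper and the monthly data are hypothetical and are not part of any ValidMind API.

```python
def error_rates(labels, flags):
    """Return (false_positive_rate, false_negative_rate) for binary labels and flags."""
    fp = sum(1 for y, f in zip(labels, flags) if y == 0 and f == 1)
    fn = sum(1 for y, f in zip(labels, flags) if y == 1 and f == 0)
    negatives = sum(1 for y in labels if y == 0)
    positives = sum(1 for y in labels if y == 1)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr

# Hypothetical confirmed outcomes (1 = fraud) and model flags, grouped by month
periods = {
    "2024-01": ([0, 0, 0, 0, 1, 1], [0, 0, 0, 0, 1, 1]),
    "2024-02": ([0, 0, 0, 0, 1, 1], [0, 1, 0, 0, 1, 0]),
}
rates_by_period = {p: error_rates(y, f) for p, (y, f) in periods.items()}
for period, (fpr, fnr) in rates_by_period.items():
    print(f"{period}: FPR={fpr:.2f}, FNR={fnr:.2f}")
```

A rising false negative rate is the harder signal to obtain, because it depends on fraud being confirmed after the fact; the lag in those labels should be factored into how often the metric is recomputed.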

AI models

Some of the challenges in AI model risk management are similar to those in conventional MRM but at a much higher level of complexity. The sheer volume of possible GenAI use cases demands robust, ongoing monitoring, as these data-hungry models cannot perform effectively without reliable data:

- Data integrity risks — Detect incomplete, inconsistent, or drifting data early, before it degrades model performance.

- Fairness and bias shifts — Identify emerging disparities in model outputs across demographic groups and address unintended bias as it develops.

- Transparency gaps — Monitor changes in feature influence, decision patterns, or explainability compliance that could affect trust and regulatory adherence.
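As one illustration of the fairness check above, the gap in positive-prediction rates between groups can be recomputed on each monitoring batch and compared with its baseline value. This sketch uses demographic parity difference as the measure, which is only one of several possible fairness metrics; the helper names and data are hypothetical.

```python
from collections import defaultdict

def positive_rate_by_group(groups, predictions):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}

def parity_gap(groups, predictions):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(groups, predictions)
    return max(rates.values()) - min(rates.values())

# Hypothetical group labels and binary model decisions
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
baseline_preds = [1, 1, 0, 0, 1, 1, 0, 0]
current_preds = [1, 1, 1, 0, 1, 0, 0, 0]

baseline_gap = parity_gap(groups, baseline_preds)
current_gap = parity_gap(groups, current_preds)
print(f"baseline gap: {baseline_gap:.2f}, current gap: {current_gap:.2f}")
```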

A rigorous monitoring framework ensures models adapt to changing conditions, keeping risk management strategies effective.

Getting started with ongoing monitoring

If you’re ready to integrate model monitoring into your MRM framework, here’s how to get started. While we’ll show you how to use ValidMind for tracking and testing, effective monitoring also requires capturing and storing runtime inputs and predictions and making them available for analysis. That data can then be sent to ValidMind as part of your broader monitoring framework.
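How you capture runtime data depends on your serving stack. As a rough sketch of the idea, each scoring event can be appended as a timestamped JSON line that a later monitoring job reads back. The record layout, function names, and feature names here are assumptions for illustration, and an in-memory stream stands in for a real log file or queue.

```python
import io
import json
from datetime import datetime, timezone

def write_record(stream, features, prediction, recorded_at=None):
    """Append one scoring event as a JSON line to a writable text stream."""
    record = {
        "recorded_at": (recorded_at or datetime.now(timezone.utc)).isoformat(),
        "features": features,
        "prediction": prediction,
    }
    stream.write(json.dumps(record) + "\n")

def read_records(stream):
    """Parse every non-empty JSON line from a readable text stream."""
    return [json.loads(line) for line in stream if line.strip()]

# In-memory stand-in for a log file or message queue
log = io.StringIO()
write_record(log, {"income": 52000, "utilization": 0.41}, 0.07,
             recorded_at=datetime(2024, 1, 15, tzinfo=timezone.utc))
write_record(log, {"income": 31000, "utilization": 0.88}, 0.32,
             recorded_at=datetime(2024, 1, 16, tzinfo=timezone.utc))

log.seek(0)
records = read_records(log)
print(len(records), "records captured")
```

Storing the timestamp alongside inputs and outputs is what later allows the data to be sliced into monitoring periods.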

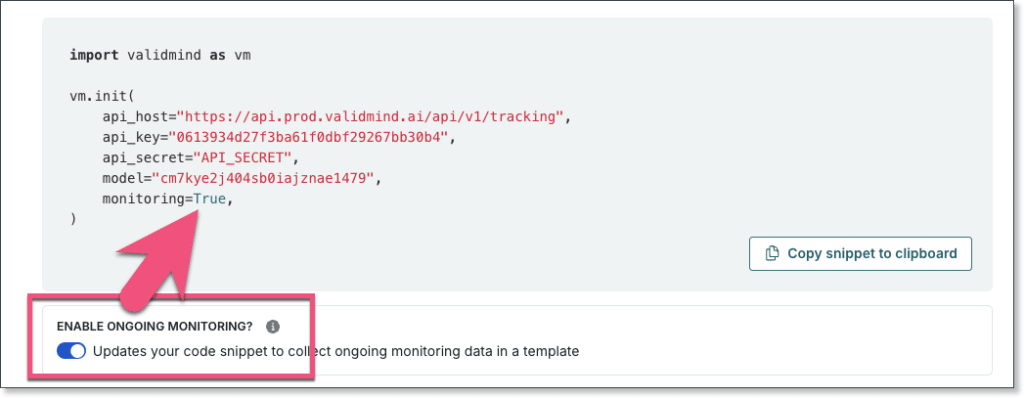

Setting up monitoring in ValidMind

To begin, enable monitoring in ValidMind by configuring your model and then selecting an ongoing monitoring template.

This setup allows monitoring results to be captured and analyzed over time.

Code samples

To make it easier to get started with ongoing monitoring, ValidMind provides pre-configured templates and code samples as notebooks you can try out or use for your own implementation. The code sample we’re using for this blog post is: Ongoing Monitoring for Application Scorecard.

Have you seen our other code samples? We provide curated Jupyter Notebooks that you can use in your own environment or run in ours.

Reviewing your monitoring results

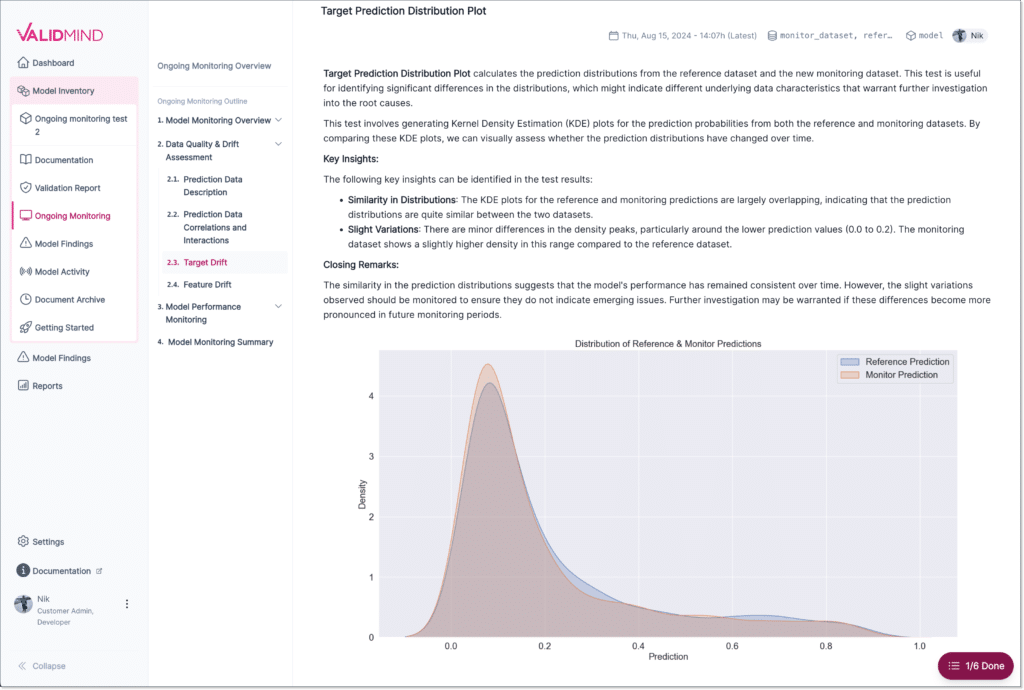

After monitoring is enabled, ValidMind automatically captures the data that you make available to it, providing a clear view of model performance. Results are accessible within the platform, allowing your teams to analyze key trends.

For example, you can use ongoing monitoring to identify:

- Target drift — Compares reference and monitoring datasets to detect shifts in the target variable.

- Feature drift — Identifies changes in input features that may affect model accuracy.
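To make the reference-versus-monitoring comparison concrete, here is a minimal sketch of a two-sample Kolmogorov-Smirnov statistic for a single numeric feature. It is a generic drift measure written with the standard library only, not the test ValidMind runs; the function name and the income values are hypothetical.

```python
from bisect import bisect_right

def ks_statistic(reference, monitoring):
    """Maximum distance between the empirical CDFs of two samples (0 = identical, 1 = disjoint)."""
    ref, mon = sorted(reference), sorted(monitoring)
    max_gap = 0.0
    # The largest gap between two step-function CDFs occurs at an observed value
    for v in sorted(set(ref) | set(mon)):
        cdf_ref = bisect_right(ref, v) / len(ref)
        cdf_mon = bisect_right(mon, v) / len(mon)
        max_gap = max(max_gap, abs(cdf_ref - cdf_mon))
    return max_gap

# Hypothetical applicant income (in thousands) at development time vs. in production
reference_income = [30, 35, 40, 45, 50]
monitoring_income = [40, 45, 50, 55, 60]

drift = ks_statistic(reference_income, monitoring_income)
print(f"KS statistic for income: {drift:.2f}")
```

For a binary target, comparing the positive rate in the two datasets is the simpler analogue of the same idea.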

Tracking metrics over time

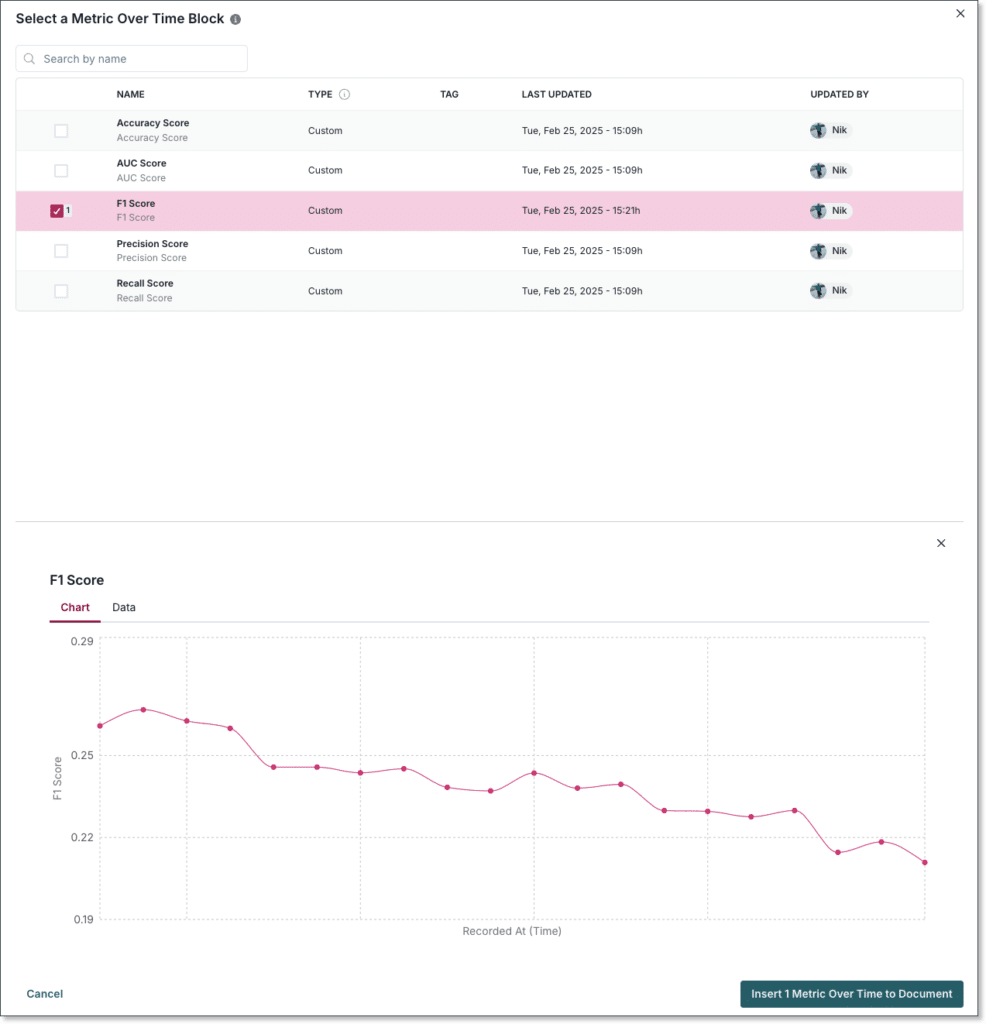

Metrics over time provide insights into model performance trends, enabling early detection of degradation or bias. These metrics are added as content blocks right in your monitoring documentation: Add metrics over time.

ValidMind supports various predefined metrics, such as the F1 Score, which can be added directly to monitoring reports.

You can also set thresholds to flag deviations and build your own metrics over time to add to the library. Together, these metrics let your organization track how model performance evolves, identify trends, and take corrective action when needed.
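The logic behind a thresholded metric over time can be sketched in a few lines: compute the metric per period, then flag any period that falls below the agreed floor. This is a plain-Python illustration rather than the platform's API; the helper names, the monthly data, and the 0.6 threshold are all hypothetical.

```python
def f1_score(labels, predictions):
    """F1 for binary labels and predictions; returns 0.0 when undefined."""
    tp = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, predictions) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def flag_breaches(metric_by_period, threshold):
    """Return the periods whose metric value fell below the threshold."""
    return [period for period, value in metric_by_period.items() if value < threshold]

# Hypothetical monthly outcomes and predictions
monthly = {
    "2024-01": ([1, 1, 0, 0, 1], [1, 1, 0, 0, 1]),
    "2024-02": ([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]),
    "2024-03": ([1, 1, 0, 0, 1], [0, 0, 1, 0, 1]),
}
f1_by_month = {m: f1_score(y, p) for m, (y, p) in monthly.items()}
breaches = flag_breaches(f1_by_month, threshold=0.6)  # 0.6 is an illustrative floor
print(f1_by_month, breaches)
```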

Metrics over time are most useful if you continue to collect them, so as part of making your model risk management more robust, you should develop an ongoing monitoring plan. This plan can be included with your model documentation on the platform, with clear instructions for execution and use of the results.

Other monitoring scenarios

Beyond post-deployment monitoring, ValidMind supports other key scenarios:

- Pre-approval monitoring — High-risk or regulatory models should undergo a trial phase before deployment to ensure reliability. These trials typically last from a few days to several weeks.

- Monitoring during major updates — When a model is updated, parallel runs compare its performance to the original. Monitoring helps assess improvements, identify risks, and determine if the update should be adopted or refined—critical for regulatory and high-impact models.
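A parallel run boils down to scoring the same inputs with both model versions and comparing the results. The sketch below assumes binary decisions and known outcomes, and summarizes accuracy for each version plus how often they disagree; the function name and data are hypothetical.

```python
def parallel_run_summary(labels, original_preds, updated_preds):
    """Compare two model versions scored on the same inputs."""
    n = len(labels)
    return {
        "original_accuracy": sum(y == p for y, p in zip(labels, original_preds)) / n,
        "updated_accuracy": sum(y == p for y, p in zip(labels, updated_preds)) / n,
        # Share of cases where the two versions reach different decisions
        "disagreement_rate": sum(a != b for a, b in zip(original_preds, updated_preds)) / n,
    }

# Hypothetical outcomes and decisions from a parallel run
labels = [1, 0, 1, 0, 1, 0, 0, 1]
original_preds = [1, 0, 0, 0, 1, 1, 0, 1]
updated_preds = [1, 0, 1, 0, 1, 1, 0, 1]

summary = parallel_run_summary(labels, original_preds, updated_preds)
print(summary)
```

The disagreement rate is worth reviewing even when accuracy improves, since it shows how many decisions would change for customers if the update were adopted.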

ValidMind can track runtime prediction data over time and compare it against baseline data to detect shifts. However, the platform does not generate or store this data directly, as we intentionally do not host your models ourselves.

Key takeaways

Continuous monitoring is more than a regulatory obligation — it is essential for robust risk management. Frameworks like SR 11‑7 and SS1/23 emphasize performance evaluation, validation, and documentation, reinforcing the need for ongoing oversight.

By embedding continuous monitoring into their operations, firms enhance resilience, adapt to market shifts, and maintain trust in their AI systems. ValidMind’s monitoring capabilities help you ensure compliance while mitigating financial and reputational risk by keeping production models performing as intended.