Amplifying Your AI Governance Expertise with AI Teaming

The conversation around artificial intelligence in regulated industries is shifting from what AI can do to how it can be governed responsibly at scale. According to David Asermely, Vice President of Global Business Development and Partner Strategy at ValidMind, one solution lies at the intersection of AI governance and human-in-the-loop systems: a model in which human expertise and judgment are scaled through AI teaming, providing the guardrails organizations need to grow safely, adaptively, and responsibly.

Why Human-in-the-Loop Matters for AI Governance

AI models require constant monitoring, validation, and improvement to remain effective.

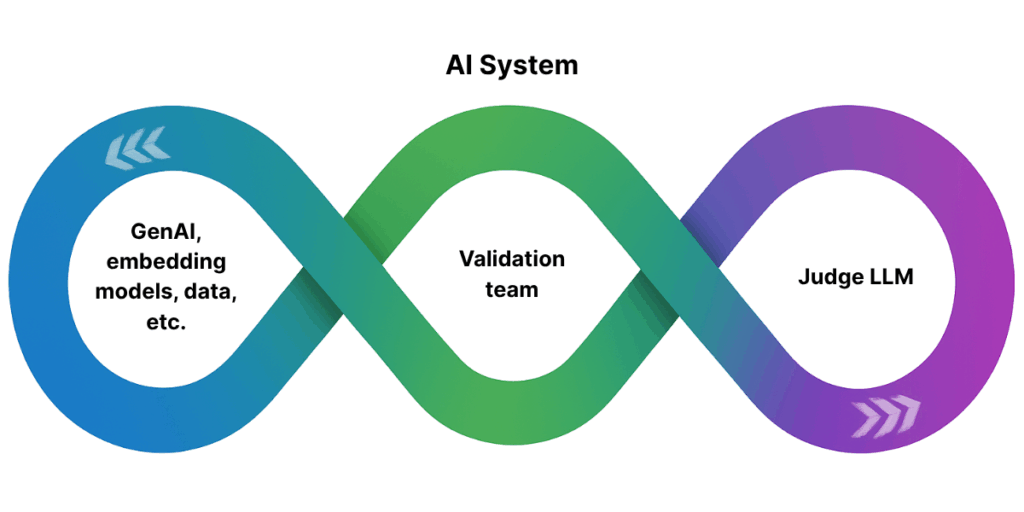

“Human-in-the-loop allows validation teams to [engage with] AI systems in a way that can scale and improve the model itself,” Asermely says. “This results in an iterative process where the validation teams are providing insights that can be used to improve both the AI Judge agents and the model itself.” When AI systems evolve responsibly over time, with full auditability and effective challenge, organizations maintain the trust of customers and regulators.

The Risks without Human Oversight

A model risk leader from a prominent U.S. bank said recently, “The most robust AI system often can be broken by stupid prompts.” Even advanced AI systems remain vulnerable without human judgment guiding their use. Current models are not ready for fully autonomous decision-making, and without a human-in-the-loop strategy, institutions risk harmful outputs. Governance guardrails build scalable human expertise into the process and act as a safeguard against errors.

Navigating the Complexities of Regulation

One challenge that comes with scaling AI responsibly is the evolving regulatory landscape. Rules and expectations vary across countries, and organizations must account for distinct governance requirements in each jurisdiction.

“Organizations operating across different regions and regulatory segments are challenged with having to meet differing expectations,” Asermely states. Building a platform with a model inventory, and tagging each use case by geography and industry, lets organizations compare local regulations against their deployed AI systems and identify gaps that need closing.
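The inventory-and-tagging idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `UseCase` record, the `REQUIREMENTS` table, and every control name are hypothetical placeholders, not ValidMind's data model or a summary of any real regulation. It shows only the shape of the comparison: tag each use case, look up what its jurisdiction and industry require, and report what is missing.

```python
from dataclasses import dataclass, field


# Hypothetical inventory record: field names are illustrative, not a real product schema.
@dataclass(frozen=True)
class UseCase:
    name: str
    geography: str  # jurisdiction tag, e.g. "EU" or "US"
    industry: str   # industry tag, e.g. "banking"
    controls: frozenset[str] = field(default_factory=frozenset)  # controls already in place


# Hypothetical requirements keyed by (geography, industry).
# The control names are placeholders, not a summary of any actual regulation.
REQUIREMENTS: dict[tuple[str, str], set[str]] = {
    ("EU", "banking"): {"model_documentation", "human_oversight", "bias_testing"},
    ("US", "banking"): {"model_documentation", "independent_validation"},
}


def find_gaps(inventory: list[UseCase]) -> dict[str, set[str]]:
    """Return {use case name: required controls it still lacks}."""
    gaps: dict[str, set[str]] = {}
    for uc in inventory:
        # Unmapped (geography, industry) pairs are treated as having no requirements.
        required = REQUIREMENTS.get((uc.geography, uc.industry), set())
        missing = required - uc.controls
        if missing:
            gaps[uc.name] = missing
    return gaps


inventory = [
    UseCase("credit_scoring_eu", "EU", "banking", frozenset({"model_documentation"})),
    UseCase("fraud_alerts_us", "US", "banking",
            frozenset({"model_documentation", "independent_validation"})),
]
for name, missing in find_gaps(inventory).items():
    print(name, sorted(missing))  # prints: credit_scoring_eu ['bias_testing', 'human_oversight']
```

In this sketch an unmapped jurisdiction silently yields no gaps; a production inventory would more likely flag such use cases for manual review, since "no known requirements" is itself a gap in regulatory mapping.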

Read more in our recent post: The Next Frontier in Model Risk Management: AI Teaming

Balancing Compliance Across Markets

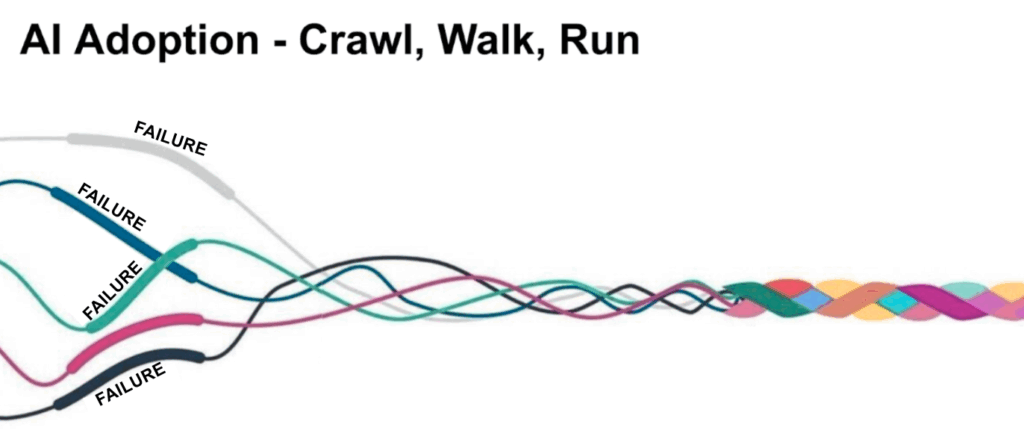

The speed at which an organization adopts AI depends largely on its regulatory environment. In more heavily regulated markets, organizations move more slowly and remain cautious about compliance obligations. While this reduces immediate risk, it can also limit institutional learning.

“In many ways, it’s preventing them from learning the hard lessons and building the institutional knowledge needed,” Asermely says. “It’s important to identify the low-risk models that you can build that institutional knowledge on while not being concerned about the regulatory framework on a low-risk model.”

In contrast, organizations in lightly regulated markets often move at “hyperspeed,” which can lead to lost control, setbacks, or hasty overhauls of their governance when new laws appear. The lesson is to avoid both extremes.

“Successful AI adoption requires feedback-driven iteration.”

David Asermely, VP of Global Business Development and Partner Strategy, ValidMind

Designing the ‘Ideal’ AI Adoption Framework

Asked what it would take to govern AI responsibly at scale, Asermely describes an ideal framework built on five foundational pillars:

- Common repository → a single source of truth for all models and use cases. “What is measured is managed,” and visibility is the starting point for accountability.

- Risk tiering → classifying systems as high, medium, or low risk to guide oversight intensity.

- Organizational alignment → establishing clear accountability for who reviews, approves, and signs off on models before deployment.

- Embedding human-AI teaming → building it directly into the process by placing validation teams inside model feedback loops, and scaling it from low-risk to high-risk models.

- Continuous monitoring and detection → guarding against misuse or failure by answering questions such as ‘How will we know when a model fails or requires attention?’
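The risk-tiering, alignment, and monitoring pillars can be made concrete with a small Python sketch. Everything in it is hypothetical: the three risk factors in `assign_tier`, the `OversightPolicy` fields, and all numeric thresholds are placeholders chosen for illustration, not ValidMind's method or any regulator's guidance. The point is the shape of the idea: the tier sets oversight intensity, and a monitoring check escalates a model when its performance drifts beyond what its tier tolerates.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class OversightPolicy:
    human_review_fraction: float  # share of outputs routed to a human validator
    requires_signoff: bool        # a named approver must sign off before deployment
    max_error_drift: float        # tolerated rise in error rate before escalation


# Hypothetical policies: higher tiers get more human review and tighter tolerances.
OVERSIGHT = {
    RiskTier.LOW: OversightPolicy(0.05, False, 0.05),
    RiskTier.MEDIUM: OversightPolicy(0.25, True, 0.02),
    RiskTier.HIGH: OversightPolicy(1.00, True, 0.01),
}


def assign_tier(customer_facing: bool, automated_decision: bool, regulated_use: bool) -> RiskTier:
    """Toy tiering rule: count how many of three hypothetical risk factors apply."""
    score = sum([customer_facing, automated_decision, regulated_use])
    if score >= 2:
        return RiskTier.HIGH
    if score == 1:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def needs_attention(tier: RiskTier, error_rate: float, baseline: float) -> bool:
    """Escalate when the observed error rate exceeds baseline by more than the tier allows."""
    return error_rate - baseline > OVERSIGHT[tier].max_error_drift


tier = assign_tier(customer_facing=True, automated_decision=True, regulated_use=False)
print(tier, OVERSIGHT[tier])
print(needs_attention(tier, error_rate=0.12, baseline=0.10))
```

Under these placeholder numbers, a two-point rise in error rate escalates a high-risk model but passes unflagged for a low-risk one, which is what "guiding oversight intensity" means in practice.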

Together, these elements create a scalable governance framework that balances innovation and responsibility.

The Future of Human-in-the-Loop and AI Teaming

The relationship between humans and machines will evolve as AI adoption grows. Asermely suggests reframing human-in-the-loop as AI teaming, a model in which human expertise is scaled through purpose-built AI governance agents. These human-AI teams share responsibility for oversight, decisions, and improvement. He predicts that high-risk AI systems will require this hybrid approach, where humans provide judgment while AI delivers speed and scale and automates low-value tasks.

“High-risk models within an organization will see a combination of AI and humans working collaboratively to make sure the AI is working properly,” Asermely states. “There are mitigation components for the organization, the end user, and the customer, and then there are intuitive, simplified ways of taking that human feedback and using it to continuously improve the AI.”

AI cannot be governed by technology alone. Adoption depends on frameworks that keep humans at the center, whether through oversight, validation, or teaming. Organizations must design governance platforms that stay flexible as regulations and markets shift. Move too slowly, and opportunities will be missed; move too fast, and trust could be lost. The future of AI is not human or machine, but a partnership in which each strengthens the other.
