E-23 Roundtable: Key Takeaways

As financial institutions adapt to evolving expectations for AI governance and regulation, OSFI's Guideline E-23 has become a critical milestone in how they manage model risk. Yesterday, ValidMind hosted a roundtable that brought together model risk leaders from across Canada to share insights, challenges, and practical approaches to implementing E-23. The discussion highlighted a shared commitment to proportional risk management, closer alignment between the first and second lines of defense, and a growing need to integrate AI and data governance frameworks.

This post recaps the key themes and takeaways from the session, from readiness and model tiering to explainability, foundational model oversight, and the future of AI risk governance.

1. Implementation Readiness and Organizational Alignment

Participants reported that E-23 implementation is progressing but uneven across institutions. Many are refining frameworks developed during the draft phase, now incorporating broader feedback from newly engaged stakeholders. Coordination across second-line teams, including AI governance, data risk, and compliance, remains a major focus.

Several attendees stressed the need for clear model identification criteria, distinguishing between models, AI systems, and non-models. The consensus: definitions must be simple, repeatable, and defensible, with risk-tiering as the foundation for effective oversight.

A recommended best practice was to design governance backward from board-level reporting: decide first what executives need to see, then define how that cascades down through operational processes. This top-down approach helps build engagement and accountability across teams.

2. Risk Methodology and Model Inventory

There was strong agreement that risk-based proportionality is essential: not all models warrant equal scrutiny. Participants emphasized:

- Establishing consistent methodologies for inherent, residual, and contagion risk.

- Integrating data governance directly into model inventory systems, including upstream/downstream linkages.

- Capturing model interdependencies and data sources to support traceability and manage cascading risk effects.
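The upstream/downstream linkages above are what make cascading (contagion) risk traceable. Below is a minimal sketch that assumes a hypothetical inventory in which each entry lists the models or data sources it consumes. Walking that graph downstream from a changed asset yields every model exposed to the change. The asset names and the dictionary layout are illustrative only. They do not represent a ValidMind or E-23 schema.

```python
from collections import deque

# Hypothetical inventory: each asset maps to the upstream assets it consumes.
# All names are illustrative, not taken from any real inventory.
UPSTREAM = {
    "pd_model": ["bureau_data", "income_model"],
    "income_model": ["payroll_data"],
    "pricing_model": ["pd_model"],
    "capital_model": ["pd_model", "pricing_model"],
}


def build_downstream(upstream: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert upstream links so we can ask: which assets consume this one?"""
    downstream: dict[str, set[str]] = {}
    for consumer, sources in upstream.items():
        for source in sources:
            downstream.setdefault(source, set()).add(consumer)
    return downstream


def impacted_by(asset: str, upstream: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk collecting every asset reachable downstream of `asset`."""
    downstream = build_downstream(upstream)
    seen: set[str] = set()
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for consumer in downstream.get(current, set()):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return seen


print(sorted(impacted_by("payroll_data", UPSTREAM)))
# → ['capital_model', 'income_model', 'pd_model', 'pricing_model']
```

The same traversal runs just as well from a data source as from a model. That matters when data governance and the model inventory live in one system, because a change to a single feed can then be traced to every model and use case that depends on it.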

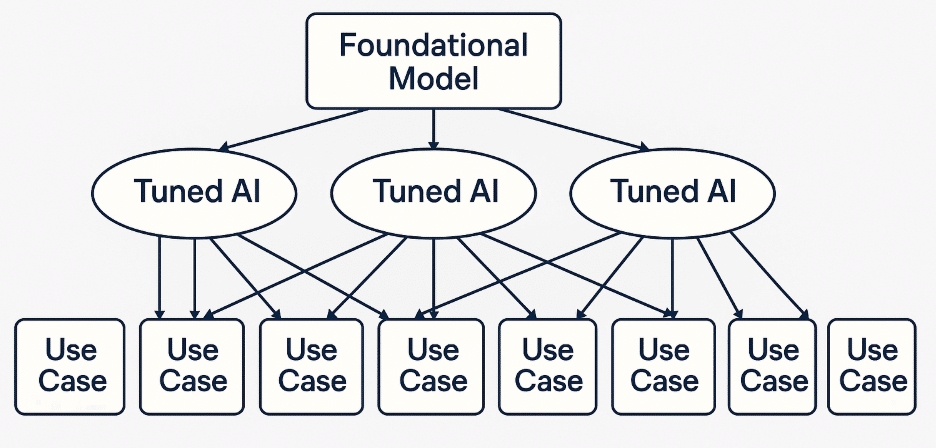

A recurring challenge: defining how foundational and generative AI (GenAI) models fit into inventory and validation frameworks. Some institutions treat foundational models as separate entities with linked downstream use cases, while others group them into unified pipelines. All recognized that frequent model updates and versioning create complexity, necessitating robust model lineage and version control.

3. Foundational and Generative AI Oversight

Most organizations are still determining how to validate and monitor foundational models used within GenAI applications.

Common perspectives included:

- Foundational models should be risk-assessed, and, where feasible, validated, especially when fine-tuned with proprietary data.

- Validation should focus on use-case-specific performance testing, explainability, and reliability.

- Vendor models accessed via APIs pose unique validation and transparency challenges, reinforcing the need for strong testing protocols and documentation of assumptions.

Some organizations have begun tracking model versions and dependencies similar to open-source registries (e.g., Hugging Face), providing granular visibility into usage and risk.
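Registry-style version tracking can be pictured as each downstream use case pinning the foundation model version it was assessed against. The sketch below assumes a hypothetical record of the latest version seen for each foundation model. It flags any use case whose pinned version no longer matches that latest version, as a candidate for review. The model names, version strings, and the plain equality check are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    """A downstream GenAI use case pinned to one foundation model version."""
    name: str
    foundation_model: str
    pinned_version: str


# Hypothetical latest versions observed for each foundation model.
LATEST = {"vendor-llm": "2024-06", "open-embed": "1.2"}

USE_CASES = [
    UseCase("complaint_summarizer", "vendor-llm", "2024-06"),
    UseCase("kyc_doc_extractor", "vendor-llm", "2024-01"),
    UseCase("policy_search", "open-embed", "1.1"),
]


def stale_use_cases(use_cases: list[UseCase], latest: dict[str, str]) -> list[UseCase]:
    """Return use cases whose pinned version differs from the latest known one."""
    return [uc for uc in use_cases if latest.get(uc.foundation_model) != uc.pinned_version]


for uc in stale_use_cases(USE_CASES, LATEST):
    print(f"{uc.name}: pinned {uc.pinned_version}, latest {LATEST.get(uc.foundation_model)}")
# → kyc_doc_extractor: pinned 2024-01, latest 2024-06
# → policy_search: pinned 1.1, latest 1.2
```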

4. Defining AI and Aligning Governance

There was broad consensus that terminology and organizational ownership remain major pain points. “AI vs. ML vs. model” confusion is widespread, complicating communication and governance alignment.

Attendees highlighted the need for:

- Enterprise-wide AI literacy initiatives.

- Inclusion of cross-functional risk partners (cybersecurity, fraud, legal, compliance) early in the model lifecycle.

- Policies that integrate AI considerations into existing governance frameworks rather than treating them as standalone.

The group also discussed expanding validation and review workflows to include these new stakeholders and ensure alignment on risk tiering and accountability.

5. Explainability, Bias, and Human-in-the-Loop Evaluation

Explainability in GenAI remains a key challenge due to the “black-box” nature of large models. Current mitigation strategies include:

- Using benchmark datasets and performance thresholds to reduce subjectivity in human evaluation.

- Experimenting with LLM-as-a-judge evaluation and visual interpretability tools.

- Implementing temperature controls and prompt management to reduce hallucination risk.
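One way to reduce subjectivity is to fix acceptance thresholds before any scoring happens, then compare aggregate benchmark scores against them. Below is a minimal sketch assuming per-item scores between 0 and 1, which could come from human raters or an LLM judge. The metric names and threshold values are hypothetical.

```python
from statistics import mean

# Hypothetical acceptance thresholds, agreed before evaluation begins.
THRESHOLDS = {"faithfulness": 0.90, "relevance": 0.80}


def evaluate(scores: dict[str, list[float]], thresholds: dict[str, float]) -> dict[str, bool]:
    """Pass a metric only if its mean benchmark score meets the pre-set threshold."""
    return {metric: mean(scores[metric]) >= limit for metric, limit in thresholds.items()}


# Illustrative per-item scores on a benchmark set (not real results).
scores = {
    "faithfulness": [1.0, 0.9, 0.8, 1.0],  # mean 0.925
    "relevance": [0.7, 0.8, 0.6, 0.9],     # mean 0.75
}
results = evaluate(scores, THRESHOLDS)
print(results)
# → {'faithfulness': True, 'relevance': False}
```

A mean is the simplest possible aggregate. An institution might instead require a minimum per-item pass rate or add confidence intervals, but the key principle is the same either way: the pass criteria exist before anyone looks at the outputs.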

Participants acknowledged that while full transparency is unrealistic, ongoing experimentation, model monitoring, and validation loops can drive incremental improvements.

6. Scaling Oversight: Tiering and Non-Model Governance

As AI adoption scales, validation teams face growing volume pressure; some are now reviewing dozens of models per month. To balance rigor and efficiency:

- Many organizations are moving from a three-tier to a four- or five-tier risk structure, carving out explicit categories for non-models and lower-risk AI systems.

- Peer review and automation are being explored to support oversight at scale.

- Governance frameworks should enable risk-based prioritization rather than exhaustive validation of all systems.
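A five-tier structure with an explicit non-model category can be sketched as a simple, repeatable function. The version below assumes a hypothetical scheme. Items that fail the institution's model-identification criteria land in "Non-model". Everything else is tiered by multiplying illustrative 1 to 3 ratings for materiality and complexity. The cut-offs and review treatments in the comments are placeholders, not E-23 requirements.

```python
from dataclasses import dataclass


@dataclass
class InventoryItem:
    name: str
    is_model: bool    # outcome of the institution's model-identification criteria
    materiality: int  # hypothetical rating: 1 (low) to 3 (high)
    complexity: int   # hypothetical rating: 1 (low) to 3 (high)


def assign_tier(item: InventoryItem) -> str:
    """Map an inventory item to one of five hypothetical tiers."""
    if not item.is_model:
        return "Non-model"  # still inventoried, but with lighter-touch review
    score = item.materiality * item.complexity  # possible values: 1, 2, 3, 4, 6, 9
    if score >= 6:
        return "Tier 1"  # e.g. full independent validation
    if score >= 4:
        return "Tier 2"
    if score >= 2:
        return "Tier 3"
    return "Tier 4"  # e.g. peer review or automated checks


items = [
    InventoryItem("credit_pd", True, 3, 2),
    InventoryItem("chat_summarizer", True, 2, 2),
    InventoryItem("fx_lookup_sheet", False, 1, 1),
    InventoryItem("report_formatter", True, 1, 1),
]
for item in items:
    print(item.name, assign_tier(item))
```

The value of encoding the rules this way is consistency. Two reviewers given the same inputs always get the same tier, and that makes the resulting prioritization easier to defend.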

7. Closing Themes

Participants aligned on several overarching principles:

- Proportionality: focus resources on higher-risk models.

- Integration: embed AI risk governance across business and risk functions.

- Transparency: maintain traceable inventories and clear ownership structures.

- Adaptability: adopt iterative, “crawl-walk-run” approaches, and don’t wait for perfection.

Organizations are encouraged to keep collaborating, share emerging practices, and evolve their frameworks as regulatory expectations and technologies mature.